Sometimes you need to reload the entire data set from the source storage into Snowflake. For example, you may want to fully refresh a quite large lookup table (2 GB compressed) without keeping the history. Let’s see how to do this in Snowflake and what issues you need to take into account.

Initial Load

We are going to use a sample table:

CREATE TABLE sites_info ( id INT, version INT, url STRING, agent STRING, params STRING, status STRING );

that was initially loaded by the following COPY command:

COPY INTO sites_info FROM (SELECT t.$1, t.$2, t.$3, t.$4, t.$5, t.$6 FROM @public.cloudsqale_s3/stage/sites_info/data.gz t) FILE_FORMAT=TSV; 1 Row(s) produced. Time Elapsed: 581.436s Goodbye!

COPY and Already Loaded Files

Note that if you repeat COPY command for the same file, Snowflake will skip it from loading:

+---------------------------------------+ | status | |---------------------------------------| | Copy executed with 0 files processed. | +---------------------------------------+

But if the size of the file and timestamp are changed, Snowflake will load it again. Of course, this will produce duplicate rows unless you use TRUNCATE or DELETE commands before copying the data.

Also note that if you use TRUNCATE, it deletes the load history so Snowflake will load the same file again even if it has the same size and timestamp!

TRUNCATE, COPY and Transactions

So if you first truncate the table and load data:

TRUNCATE TABLE sites_info; COPY INTO sites_info FROM (SELECT t.$1, t.$2, t.$3, t.$4, t.$5, t.$6 FROM @public.cloudsqale_s3/stage/sites_info/data.gz t) FILE_FORMAT=TSV;

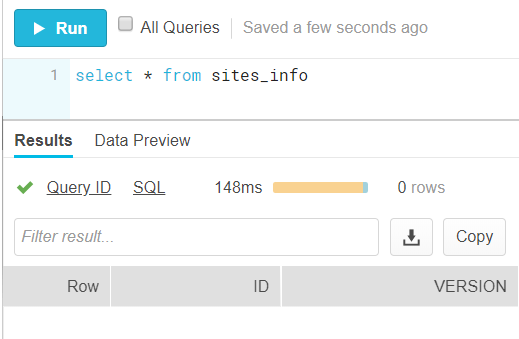

You can notice that the COPY took about 10 minutes, and during this time all other queries will see 0 rows in the table because it was truncated:

This can affects your reports, introduce many discrepancies and so on.

Hopefully, Snowflake allows you to put both TRUNCATE and COPY statements into a transaction block:

BEGIN; TRUNCATE TABLE sites_info; COPY INTO sites_info FROM (SELECT t.$1, t.$2, t.$3, t.$4, t.$5, t.$6 FROM @public.cloudsqale_s3/stage/sites_info/data.gz t) FILE_FORMAT=TSV; COMMIT;

Now you can query the table successfully during the COPY command execution: