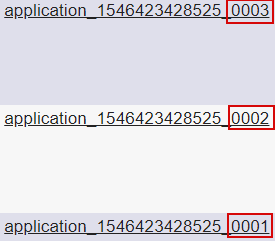

When you run a job in Hadoop, you may see the following error: "Application with id 'application_1545962730597_2614' doesn't exist in RM". If you then open the YARN Resource Manager UI at http://<RM_IP_Address>:8088/cluster/apps, you can see that the application IDs have started again from low sequence numbers:

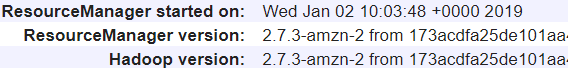

Additionally, the cluster page at http://<RM_IP_Address>:8088/cluster/cluster shows the Resource Manager start timestamp.

This means that your YARN Resource Manager was silently restarted in Amazon EMR. Long-running jobs can be killed as a result, which affects your SLAs.
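The application ID from the error message also carries a hint about the restart: a YARN application ID has the form application_<clusterTimestamp>_<sequence>, where the cluster timestamp is the start time in epoch milliseconds of the Resource Manager that issued the ID. The sketch below decodes it; the function name `rm_start_time` is only an illustration and not part of any Hadoop API.

```python
from datetime import datetime, timedelta, timezone


def rm_start_time(application_id: str) -> datetime:
    """Return the Resource Manager start time (UTC) embedded in a YARN application ID."""
    # Expected format: application_<clusterTimestamp in ms>_<sequence number>
    prefix, cluster_ts_ms, _sequence = application_id.split("_")
    if prefix != "application":
        raise ValueError(f"not a YARN application ID: {application_id!r}")
    millis = int(cluster_ts_ms)
    # Integer arithmetic avoids floating-point rounding of the milliseconds.
    seconds, remainder_ms = divmod(millis, 1000)
    return datetime.fromtimestamp(seconds, tz=timezone.utc) + timedelta(milliseconds=remainder_ms)


print(rm_start_time("application_1545962730597_2614"))
# 2018-12-28 02:05:30.597000+00:00
```

If the timestamp decoded from a failed application's ID is older than the start time shown in the Resource Manager UI, the application was submitted to a previous Resource Manager instance.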

In my case, the YARN Resource Manager process was killed because of an out-of-memory error. To check this, go to the /var/log/hadoop-yarn directory on your EMR master node and open the Resource Manager STDOUT file yarn-yarn-resourcemanager-<ip-address>.out.1.gz (since the Resource Manager has already been restarted, you need to look at the previous, rotated file):

    Dec 28, 2018 2:05:32 AM com.sun.jersey.guice.spi.container.GuiceComponentProviderFactory register
    INFO: Registering org.apache.hadoop.yarn.server.resourcemanager.webapp.JAXBContextResolver as a provider class
    ...
    # java.lang.OutOfMemoryError: Java heap space
    # -XX:OnOutOfMemoryError="kill -9 %p kill -9 %p"
    # Executing /bin/sh -c "kill -9 22618 kill -9 22618"...
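If there are several rotated files to go through, the search can be scripted. The following is a minimal sketch that only uses the Python standard library; the helper names `find_oom_lines` and `find_oom_in_gz` are made up for this example.

```python
import gzip
from typing import Iterable, List


def find_oom_lines(lines: Iterable[str]) -> List[str]:
    """Return the lines that mention a JVM OutOfMemoryError."""
    return [line.rstrip("\n") for line in lines if "OutOfMemoryError" in line]


def find_oom_in_gz(path: str) -> List[str]:
    """Scan a gzip-compressed log file, such as a rotated .out.N.gz file."""
    # Text mode ("rt") decodes the bytes; undecodable bytes are replaced, not fatal.
    with gzip.open(path, "rt", errors="replace") as handle:
        return find_oom_lines(handle)
```

Call `find_oom_in_gz` with the full path of the rotated file, for example the yarn-yarn-resourcemanager-<ip-address>.out.1.gz file in /var/log/hadoop-yarn, with the placeholder replaced by the actual file name on your master node.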

You can run the following command on the YARN master node to check the Resource Manager memory settings (-Xmx option):

    $ ps aux | grep resourcemanager
    yarn 35344 14.2 7.6 6835112 4723396 ? Sl Jan01 677:12 /usr/lib/jvm/java-openjdk/bin/java -Dproc_resourcemanager -Xmx3317m -XX:OnOutOfMemoryError=kill -9 %p -XX:OnOutOfMemoryError=kill -9 %p -Dhadoop.log.dir=/var/log/hadoop-yarn -Dyarn.log.dir=/var/log/hadoop-yarn ... org.apache.hadoop.yarn.server.resourcemanager.ResourceManage
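For monitoring scripts it can be handy to extract the -Xmx value from such a command line programmatically. This is a rough sketch, assuming the usual k/m/g suffixes; `max_heap_bytes` is a hypothetical helper name.

```python
import re
from typing import Optional

# Multipliers for the size suffixes accepted by -Xmx (no suffix means bytes).
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def max_heap_bytes(command_line: str) -> Optional[int]:
    """Return the -Xmx value in bytes, or None if the option is absent."""
    matches = re.findall(r"-Xmx(\d+)([kKmMgG]?)(?=\s|$)", command_line)
    if not matches:
        return None
    # Assumption: if the option is repeated, the last occurrence is the effective one.
    number, unit = matches[-1]
    return int(number) * _UNITS[unit.lower()]


cmd = "/usr/lib/jvm/java-openjdk/bin/java -Dproc_resourcemanager -Xmx3317m -Dyarn.log.dir=/var/log/hadoop-yarn"
print(max_heap_bytes(cmd) // (1024 ** 2), "MB")
```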

As you can see, the Resource Manager was started with -Xmx3317m. You can raise this limit for the next restart by setting the YARN_RESOURCEMANAGER_HEAPSIZE environment variable (the value is in MB) in /etc/hadoop/conf/yarn-env.sh.

Additionally, you can use the work-preserving Resource Manager restart feature, available since Hadoop 2.6, which preserves the jobs that were running when the Resource Manager restarted.

You can set the following option in the /etc/hadoop/conf/yarn-site.xml file:

    <property>
      <name>yarn.resourcemanager.recovery.enabled</name>
      <value>true</value>
    </property>

With this option, the Resource Manager doesn’t need to kill the applications during a restart; the applications simply re-sync with the Resource Manager and resume their work.
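To verify what is currently configured on a node, you can read the property back from yarn-site.xml. Below is a small sketch using the Python standard library; the function names are illustrative, and it treats a missing property as false, which is the YARN default for this setting.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def read_hadoop_properties(xml_text: str) -> Dict[str, str]:
    """Parse a Hadoop *-site.xml document into a name -> value dictionary."""
    root = ET.fromstring(xml_text)
    properties = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            properties[name.strip()] = (prop.findtext("value") or "").strip()
    return properties


def recovery_enabled(xml_text: str) -> bool:
    """Return True if Resource Manager recovery is switched on."""
    value = read_hadoop_properties(xml_text).get("yarn.resourcemanager.recovery.enabled", "false")
    return value.lower() == "true"


sample = """
<configuration>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
</configuration>
"""
print(recovery_enabled(sample))  # True
```

On a real node, pass the contents of /etc/hadoop/conf/yarn-site.xml instead of the sample string.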

Current YARN Resource Manager Heap Usage

Knowing the Resource Manager process ID, you can check the current Java heap usage:

    $ sudo jstat -gc 35344
     S0C     S1C    S0U  S1U    EC      EU      OC       OU      MC     MU   ...
    102400  104448  0.0  0.0  795136  687850  2265088  2264931  50008  48928 ...

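jstat reports all sizes in KB. The committed heap is the sum of Survivor Space 0 and 1 (S0C, S1C), Eden (EC) and the Old generation (OC): S0C + S1C + EC + OC = 102400 + 104448 + 795136 + 2265088 = 3267072 KB (about 3190 MB), which is close to the -Xmx3317m limit. Metaspace (MC) is allocated in native memory outside the Java heap, so it does not count toward -Xmx.

The used heap space is S0U + S1U + EU + OU = 0 + 0 + 687850 + 2264931 = 2952781 KB (about 2884 MB, or 90.4% of the committed heap). Note that the Old generation is practically full: 2264931 of 2265088 KB.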
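The same arithmetic can be scripted for periodic checks. The sketch below relies on jstat -gc reporting sizes in KB, sums only the heap generations (survivor spaces, Eden, Old), and reports Metaspace separately because it is native memory that -Xmx does not bound. The helper names and the trimmed sample are illustrative only.

```python
from typing import Dict


def parse_jstat_gc(output: str) -> Dict[str, float]:
    """Map jstat -gc column names to numeric values (sizes are in KB)."""
    header, values = [line.split() for line in output.strip().splitlines()[-2:]]
    parsed = {}
    for name, raw in zip(header, values):
        try:
            parsed[name] = float(raw)
        except ValueError:
            pass  # skip non-numeric cells such as "-"
    return parsed


def heap_summary(gc: Dict[str, float]) -> Dict[str, float]:
    """Summarize heap usage; Metaspace is reported separately from the heap."""
    capacity_kb = gc["S0C"] + gc["S1C"] + gc["EC"] + gc["OC"]
    used_kb = gc["S0U"] + gc["S1U"] + gc["EU"] + gc["OU"]
    return {
        "heap_capacity_mb": capacity_kb / 1024,
        "heap_used_mb": used_kb / 1024,
        "heap_used_pct": 100 * used_kb / capacity_kb,
        "old_gen_used_pct": 100 * gc["OU"] / gc["OC"],
        "metaspace_used_mb": gc["MU"] / 1024,
    }


# Only the columns shown above; real jstat output has more columns.
SAMPLE = """
S0C S1C S0U S1U EC EU OC OU MC MU
102400 104448 0.0 0.0 795136 687850 2265088 2264931 50008 48928
"""

for key, value in heap_summary(parse_jstat_gc(SAMPLE)).items():
    print(f"{key}: {value:.1f}")
```

A heap that stays around 90% used with an Old generation that is almost completely full, as in this sample, suggests that the Resource Manager needs a larger heap.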