I had a SQL query that failed on one of the Presto 0.208 clusters with the “Query exceeded per-node total memory” (com.facebook.presto.ExceededMemoryLimitException) error. How can you solve this problem? I will consider a few possible solutions, but firstly let’s review the memory allocation in Presto.

Memory Pools

Presto 0.208 has 3 main memory areas: General pool, Reserved pool and Headroom.

All queries start running and consuming memory from the General pool. When it is exhausted, Presto selects a query that currently consumes the largest amount of memory and moves it into the Reserved pool on each worker node.

To guarantee the execution of the largest possible query the size of the Reserved pool is defined by query.max-total-memory-per-node option.

But there is a limitation: only one query can run in the Reserved pool at a time! If there are multiple large queries that do not have enough memory to run in the General pool, they are queued i.e. executed one by one in the Reserved pool. Small queries still can run in the General pool concurrently.

Headroom is an additional reserved space in the JVM heap for I/O buffers and other memory areas that are not tracked by Presto. The size of Headroom is defined by the memory.heap-headroom-per-node option.

The size of the General pool is calculated as follows:

General Pool = Java Max Heap Size (Xmx) - Reserved Pool - Headroom

There is an option to disable the reserved pool, and I will consider it as well.

Initial Attempt

In my case the query was:

SELECT country, category, product, COUNT(DISTINCT user_id), SUM(items) FROM orders GROUP BY CUBE country, category, product;

and it failed after running for 2 minutes with the following error:

Query exceeded per-node total memory limit of 10GB

[Allocated: 9.99GB, Delta: 16.70MB, Top Consumers: {MarkDistinctOperator=9.57GB,

ScanFilterAndProjectOperator=331.08MB, ExchangeOperator=43.20MB}]

The initial cluster configuration:

jvm.config: -Xmx54g config.properties: query.max-total-memory-per-node=10GB query.max-memory-per-node=10GB memory.heap-headroom-per-node=9GB

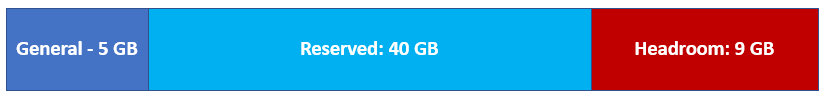

With this configuration the size of the Reserved pool is 10 GB (it is equal to query.max-total-memory-per-node), the General pool is 35 GB (54 – 10 – 9):

The query was executed in the General pool but it hit the limit of 10 GB defined by query.max-total-memory-per-node option and failed.

resource_overcommit = true

There is the resource_overcommit session property that allows a query to overcome the memory limit per node:

set session resource_overcommit = true;

Now the query ran about 6 minutes and failed with:

Query exceeded per-node total memory limit of 35GB

[Allocated: 35.00GB, Delta: 1.04MB, Top Consumers: {MarkDistinctOperator=34.31GB,

ScanFilterAndProjectOperator=592.08MB, PartitionedOutputOperator=106.37MB}]

It was a single query running on the cluster, so you can see that it was able to use the entire General pool of 35 GB per node, although it still was not enough to compete successfully.

Increasing query.max-total-memory-per-node

Since 35 GB was not enough to run the query, so let’s try to increase query.max-total-memory-per-node and see if this helps complete the query successfully (the cluster restart is required):

jvm.config: -Xmx54g config.properties: query.max-total-memory-per-node=40GB query.max-memory-per-node=40GB memory.heap-headroom-per-node=9GB

With this configuration the size of the Reserved pool is 40 GB, the General pool is 5 GB (54 – 40 – 9):

Now the query ran 7 minutes and failed with:

Query exceeded per-node total memory limit of 40GB

[Allocated: 40.00GB, Delta: 1.14MB, Top Consumers: {MarkDistinctOperator=39.28GB,

ScanFilterAndProjectOperator=594.21MB, PartitionedOutputOperator=137.71MB}]

It is a little bit funny but with this configuration setting resource_overcommit = true makes things worse:

set session resource_overcommit = true;

Now the query quickly fails within 34 seconds with the following error:

Query exceeded per-node total memory limit of 5GB

[Allocated: 5.00GB, Delta: 1.07MB, Top Consumers: {MarkDistinctOperator=4.65GB,

ScanFilterAndProjectOperator=260.15MB, ExchangeOperator=42.83MB}]

resource_overcommit forces to use the General pool only, the query cannot migrate to the Reserved pool anymore, but the General pool is just 5 GB now.

But there is a more serious problem. The General pool of 5 GB and the Reserve pool of 45 GB allows you to run larger queries (up to 45 GB per node), but significantly reduces the concurrency of the cluster as the Reserved pool can run only one query at a time, and the General pool that can run queries concurrently is too small now. So you can more queued queries waiting for the Reserved pool availability.

experimental.reserved-pool-enabled = false

To solve the concurrency problem and allow running queries with larger memory Presto 0.208 allows you to disable the Reserved pool (it is going to be default in future Presto versions):

experimental.reserved-pool-enabled=false query.low-memory-killer.policy=total-reservation-on-blocked-nodes

Now you can set an even larger value for the query.max-total-memory-per-node option:

jvm.config: -Xmx54g config.properties: query.max-total-memory-per-node=45GB query.max-memory-per-node=45GB memory.heap-headroom-per-node=9GB experimental.reserved-pool-enabled=false query.low-memory-killer.policy=total-reservation-on-blocked-nodes

With this configuration all memory except Headroom is used for the General pool:

But the query still fails after running for 7 minutes 30 seconds:

Query exceeded per-node total memory limit of 45GB

[Allocated: 45.00GB, Delta: 848.93kB, Top Consumers: {MarkDistinctOperator=44.55GB,

ScanFilterAndProjectOperator=352.99MB, PartitionedOutputOperator=125.44MB}]

So there is still not enough memory.

experimental.spill-enabled=true

Since we cannot allocate more memory on nodes (hit the physical memory limit), let’s see if the spill-to-disk feature can help us (the cluster restart is required):

config.properties: experimental.spill-enabled=true experimental.max-spill-per-node=80GB experimental.query-max-spill-per-node=80GB

Unfortunately this does not help, the query still fails with the same error:

Query exceeded per-node total memory limit of 45GB

[Allocated: 45.00GB, Delta: 1.03MB, Top Consumers: {MarkDistinctOperator=44.31GB,

ScanFilterAndProjectOperator=593.45MB, PartitionedOutputOperator=145.84MB}]

The reason is that not all operations can be spilled to disk, and as of Presto 0.208 COUNT DISTINCT (MarkDistinctOperator) is one of them.

So looks like the only solution to make this query work is to use compute instances with more memory or add more nodes to the cluster.

In my case I had to increase the cluster to 5 nodes to run the query successfully, it took 9 minutes 45 seconds and consumed 206 GB of peak memory.

Checking Presto Memory Settings

If you do not have access to jvm.config and config.properties configuration files or server.log you can query the JMX connector to get details about the memory settings.

To get the JVM heap size information on nodes (max means -Xmx setting):

SELECT heapmemoryusage FROM jmx.current."java.lang:type=memory";

heapmemoryusage

---------------

contents={committed=25341984768, init=503316480, max=57982058496, used=5437672232}

...

To get the Reserved and General pool for each node in the cluster:

SELECT maxbytes, node, object_name FROM jmx.current."com.facebook.presto.memory:name=*,type=memorypool"; maxbytes node object_name ---------------------------------------- 15,452,177,408 i-0482e com.facebook.presto.memory:type=MemoryPool,name=reserved 25,760,813,056 i-0482e com.facebook.presto.memory:type=MemoryPool,name=general 6,751,990,579 i-01789 com.facebook.presto.memory:type=MemoryPool,name=reserved 9,002,654,106 i-01789 com.facebook.presto.memory:type=MemoryPool,name=general 15,452,177,408 i-03177 com.facebook.presto.memory:type=MemoryPool,name=reserved 25,760,813,056 i-03177 com.facebook.presto.memory:type=MemoryPool,name=general ...

When the Reserved pool is disabled the query returns information for the General pool only:

SELECT maxbytes, node, object_name FROM jmx.current."com.facebook.presto.memory:name=*,type=memorypool"; maxbytes node object_name ----------------------------------------- 48,318,382,080 i-0001c com.facebook.presto.memory:type=MemoryPool,name=general 40,587,440,948 i-0864a com.facebook.presto.memory:type=MemoryPool,name=general 48,318,382,080 i-00b7e com.facebook.presto.memory:type=MemoryPool,name=general ...

Conclusion

- You can try to increase

query.max-total-memory-per-nodeon your cluster, but to preserve the concurrency make sure that the Reserved pool is disabled (this will be default in Presto soon). - Enabling the disk-spill feature is helpful, but remember that not all Presto operators support it.

- Sometimes only using instances with more memory or adding more nodes can help solve the memory issues.