I have already written about recovering ghost nodes in Amazon EMR clusters. This article extends that material to EMR clusters configured for auto-scaling.

When you run a cluster in auto-scaling mode, you see many more instances marked as RUNNING/TERMINATED in EMR, or ACTIVE/LOST in Hadoop YARN.

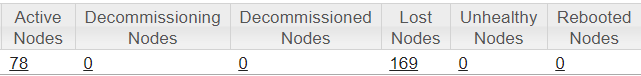

Here is a snapshot from my cluster at some point:

A large number of LOST nodes does not necessarily mean that the cluster is unhealthy and requires attention. Most LOST nodes are simply nodes that were removed from the cluster during scale-down operations.

In my case, the aws emr list-instances --cluster-id command showed even more nodes, 1,101 in total: 101 RUNNING and 1,000 TERMINATED.

This is where the 22 ghost nodes show up: YARN reports 78 ACTIVE nodes, while EMR reports 100 RUNNING nodes (plus 1 master node). It means we keep paying for 22 EC2 instances that run permanently but are not used, which costs about $500 per day (!) until we fix the problem.
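The numbers above can be checked with a few lines of arithmetic. This is only a sketch: the hourly rate it prints is not a price quoted in this article, just what the approximate $500 per day figure implies when spread over the 22 unused instances.

```python
# Figures taken from the article
emr_running = 101   # RUNNING instances reported by EMR, master node included
master_nodes = 1    # the master node does not show up as a YARN node
yarn_active = 78    # ACTIVE nodes reported by YARN

# Instances that EMR keeps running but YARN does not use
ghost_nodes = emr_running - master_nodes - yarn_active

# Approximate daily cost from the article, in USD
daily_cost = 500.0

# Implied (not quoted) hourly cost per unused instance
implied_hourly_rate = daily_cost / (ghost_nodes * 24)

print(ghost_nodes)
print(round(implied_hourly_rate, 2))
```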

First, let’s find all running nodes in EMR using the following Python code:

    import json
    import subprocess

    cluster_id = 'set your cluster ID here'

    emr_nodes_list = []

    # List all cluster instances known to EMR (RUNNING and TERMINATED alike)
    emr_nodes = subprocess.check_output("aws emr list-instances --cluster-id=" + cluster_id, shell = True)

    for i in json.loads(emr_nodes)["Instances"]:
        emr_node = {
            "State" : i["Status"]["State"],
            "Ec2InstanceId": i["Ec2InstanceId"],
            "PrivateDnsName": i["PrivateDnsName"]
        }
        # Keep only the instances EMR still considers RUNNING
        if i["Status"]["State"] == 'RUNNING':
            emr_nodes_list.append(emr_node)

Sample content of the emr_nodes_list variable:

    [{'PrivateDnsName': 'ip-10-95-17-215.ec2.internal', 'State': u'RUNNING', 'Ec2InstanceId': 'i-0518'},
    {'PrivateDnsName': 'ip-10-95-18-101.ec2.internal', 'State': u'RUNNING', 'Ec2InstanceId': 'i-0866'},
    ...
    {'PrivateDnsName': 'ip-10-95-20-169.ec2.internal', 'State': u'RUNNING', 'Ec2InstanceId': 'i-03b6'}]
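As a possible variation on the code above, the AWS CLI can do the state filtering itself through the --instance-states option of list-instances, and the command can be passed as an argument list so that no shell is involved. The sketch below is an alternative under those assumptions, not the code I ran on my cluster; the function names are made up for illustration.

```python
import json
import subprocess


def list_instances_command(cluster_id):
    """Build the AWS CLI call as an argument list (no shell involved)."""
    return ["aws", "emr", "list-instances",
            "--cluster-id", cluster_id,
            "--instance-states", "RUNNING"]


def parse_running_instances(cli_output):
    """Extract the fields used in this article from list-instances JSON."""
    return [
        {
            "State": inst["Status"]["State"],
            "Ec2InstanceId": inst["Ec2InstanceId"],
            "PrivateDnsName": inst["PrivateDnsName"],
        }
        for inst in json.loads(cli_output)["Instances"]
        # Re-check the state in case the output was not pre-filtered
        if inst["Status"]["State"] == "RUNNING"
    ]


def fetch_running_instances(cluster_id):
    """Run the CLI and parse its output; needs configured AWS credentials."""
    output = subprocess.check_output(list_instances_command(cluster_id))
    return parse_running_instances(output)
```

Calling fetch_running_instances with your cluster ID should give a list in the same shape as emr_nodes_list. Keeping the parsing separate from the CLI call makes it easy to try the logic on saved JSON output first.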

Then we can get a list of nodes in YARN:

    import requests

    cluster_ip = 'set your cluster IP here'

    yarn_nodes = {}

    # Map each YARN node host name to its state (ACTIVE, LOST, ...)
    for i in requests.get('http://' + cluster_ip + ':8088/ws/v1/cluster/nodes').json()["nodes"]["node"]:
        yarn_nodes[i["nodeHostName"]] = i["state"]
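To compare against the ACTIVE/LOST totals that YARN shows, it can help to count the nodes per state. The sketch below works on an already parsed response from the /ws/v1/cluster/nodes endpoint; the function names are hypothetical, and the handling of an empty "nodes" value is a defensive assumption rather than something I observed on my cluster.

```python
from collections import Counter


def yarn_node_states(response_json):
    """Map nodeHostName -> state from a /ws/v1/cluster/nodes response."""
    # Assumption: "nodes" can be null/missing when YARN has nothing to report
    nodes = (response_json.get("nodes") or {}).get("node", [])
    return {n["nodeHostName"]: n["state"] for n in nodes}


def count_by_state(yarn_nodes):
    """Count how many YARN nodes are in each state."""
    return Counter(yarn_nodes.values())


# Small made-up response in the shape returned by the YARN REST API
sample_response = {"nodes": {"node": [
    {"nodeHostName": "ip-10-95-17-215.ec2.internal", "state": "ACTIVE"},
    {"nodeHostName": "ip-10-95-5-215.ec2.internal", "state": "LOST"},
    {"nodeHostName": "ip-10-95-5-245.ec2.internal", "state": "LOST"},
]}}

states = yarn_node_states(sample_response)
counts = count_by_state(states)
print(counts["ACTIVE"], counts["LOST"])
```

With a live cluster, the parsed JSON from the requests.get call above can be passed to yarn_node_states instead of the sample data.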

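Finally, you can get the nodes that are RUNNING in EMR but are either missing from Hadoop YARN or have the LOST state:

    for i in emr_nodes_list:
        ip = i["PrivateDnsName"]
        if ip not in yarn_nodes:
            # RUNNING in EMR, but YARN does not know this host at all
            print(i)
        elif yarn_nodes[ip] == 'LOST':
            # RUNNING in EMR, but YARN has marked the node as LOST
            print("LOST - " + str(i))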

Sample output:

    LOST - {'PrivateDnsName': 'ip-10-95-5-215.ec2.internal', 'State': 'RUNNING', 'Ec2InstanceId': 'i-09d8'}
    LOST - {'PrivateDnsName': 'ip-10-95-5-245.ec2.internal', 'State': 'RUNNING', 'Ec2InstanceId': 'i-0f2c'}
    LOST - {'PrivateDnsName': 'ip-10-95-5-157.ec2.internal', 'State': 'RUNNING', 'Ec2InstanceId': 'i-0946'}
    LOST - {'PrivateDnsName': 'ip-10-95-5-113.ec2.internal', 'State': 'RUNNING', 'Ec2InstanceId': 'i-0ff1'}
    ...

In my case all 22 nodes had the LOST state.
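If you want to reuse the comparison rather than just print it, the same logic can be wrapped in a function that returns the two groups separately. This is a minimal sketch; find_ghost_nodes is a name I made up here, and it expects the same emr_nodes_list and yarn_nodes structures built earlier.

```python
def find_ghost_nodes(emr_nodes_list, yarn_nodes):
    """Split RUNNING EMR nodes into those missing from YARN and those LOST."""
    missing, lost = [], []
    for node in emr_nodes_list:
        state = yarn_nodes.get(node["PrivateDnsName"])
        if state is None:
            missing.append(node)   # YARN has no record of this host
        elif state == "LOST":
            lost.append(node)      # YARN knows the host but lost contact
    return missing, lost


# Made-up sample data in the same shape as the structures in this article
sample_emr = [
    {"PrivateDnsName": "ip-10-95-17-215.ec2.internal", "State": "RUNNING", "Ec2InstanceId": "i-0518"},
    {"PrivateDnsName": "ip-10-95-5-215.ec2.internal", "State": "RUNNING", "Ec2InstanceId": "i-09d8"},
    {"PrivateDnsName": "ip-10-95-5-245.ec2.internal", "State": "RUNNING", "Ec2InstanceId": "i-0f2c"},
]
sample_yarn = {
    "ip-10-95-17-215.ec2.internal": "ACTIVE",
    "ip-10-95-5-215.ec2.internal": "LOST",
}

missing, lost = find_ghost_nodes(sample_emr, sample_yarn)
print([n["Ec2InstanceId"] for n in missing])
print([n["Ec2InstanceId"] for n in lost])
```

The instance IDs collected this way can then be fed into the EC2 status check that follows.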

You can use the aws ec2 describe-instance-status command to see the status of each EC2 instance in the list above:

    $ aws ec2 describe-instance-status --instance-ids i-073d
    ...
    "SystemStatus": {
        "Status": "ok",
        "Details": [{"Status": "passed", "Name": "reachability"}]
    },
    "InstanceStatus": {
        "Status": "impaired",
        "Details": [{"Status": "failed", "Name": "reachability"}]
    ...

So the node is unhealthy. You can manually terminate each impaired instance:

    aws ec2 modify-instance-attribute --no-disable-api-termination --instance-id i-073d
    aws ec2 terminate-instances --instance-ids i-073d
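With 22 instances to handle, doing this by hand gets tedious. The sketch below picks the impaired instances out of parsed describe-instance-status output and builds the same two commands for each of them. It only builds the commands and does not run them; the function names are hypothetical, and the sample data is made up to match the output shape shown above.

```python
def impaired_instance_ids(status_json):
    """Return IDs whose instance-level status check reports 'impaired'."""
    return [
        s["InstanceId"]
        for s in status_json.get("InstanceStatuses", [])
        if s["InstanceStatus"]["Status"] == "impaired"
    ]


def termination_commands(instance_id):
    """Build the two AWS CLI calls used in this article for one instance."""
    return [
        # First lift termination protection, then terminate the instance
        ["aws", "ec2", "modify-instance-attribute",
         "--no-disable-api-termination", "--instance-id", instance_id],
        ["aws", "ec2", "terminate-instances", "--instance-ids", instance_id],
    ]


# Made-up sample in the shape of parsed describe-instance-status output
sample_status = {"InstanceStatuses": [
    {"InstanceId": "i-073d",
     "SystemStatus": {"Status": "ok"},
     "InstanceStatus": {"Status": "impaired"}},
    {"InstanceId": "i-0518",
     "SystemStatus": {"Status": "ok"},
     "InstanceStatus": {"Status": "ok"}},
]}

for instance_id in impaired_instance_ids(sample_status):
    for cmd in termination_commands(instance_id):
        # Dry run: print each command for review instead of executing it
        print(" ".join(cmd))
```

Printing the commands first gives you a chance to review the list before anything is terminated. Once you are happy with it, each command list can be passed to subprocess.check_call.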

Now we are good and do not need to pay for unused resources.

P.S. In my cluster, these 22 impaired nodes prevented the cluster from scaling down any further, so it never ran fewer than 100 nodes. After I removed the impaired nodes, the cluster could successfully scale down to 60 nodes and below.